Streamlined Fine-Tuning of an AI Transformer Model with Financial Sentiment Data

1 July, 2024

In financial technology, the ability to accurately interpret and respond to market sentiment is crucial. Traditional methods of sentiment analysis often require extensive datasets and complex code, but recent advances in AI models have streamlined this process considerably. Here we explore how current AI models can be fine-tuned efficiently with minimal labeled data, and what that means for analyzing financial sentiment.

Leveraging PEFT for Efficient Fine-Tuning

Parameter-Efficient Fine-Tuning (PEFT) adapts a pre-trained model by training only a small number of additional parameters while the original weights stay frozen, which allows for rapid model adjustments with just a few lines of code. The Hugging Face PEFT library builds on the Transformers library and offers an alternative to earlier few-shot frameworks such as SetFit. This exploration focuses on fine-tuning a Large Language Model (LLM) to understand sentiment in the bond market, a crucial aspect of financial analysis.

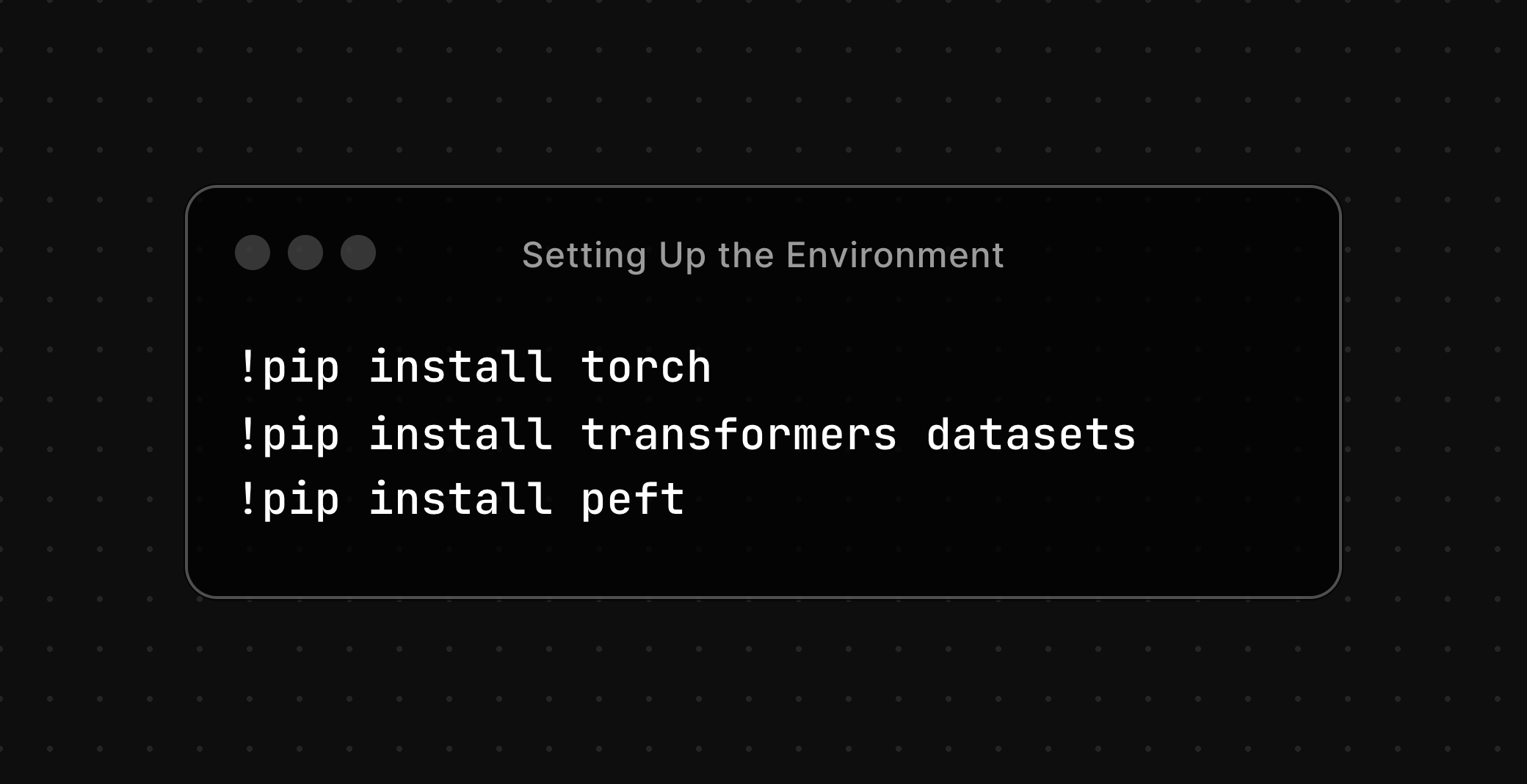
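To make the "parameter-efficient" part concrete, here is a small arithmetic sketch. It assumes LoRA, one of the methods available in PEFT; the article does not state which method is used, and the layer size and rank below are illustrative choices only. LoRA leaves a dense weight matrix frozen and trains two low-rank factors beside it.

```python
def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the two low-rank factors: A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


def full_params(d_in: int, d_out: int) -> int:
    """Parameters in the dense weight matrix itself (bias ignored)."""
    return d_in * d_out


# Illustrative numbers (assumed): a 768 x 768 projection adapted with rank 8.
d, r = 768, 8
lora = lora_trainable_params(d, d, r)
full = full_params(d, d)
print(f"LoRA: {lora:,} trainable vs {full:,} frozen ({lora / full:.2%})")
# prints: LoRA: 12,288 trainable vs 589,824 frozen (2.08%)
```

Under these assumptions, each adapted layer trains roughly 2% of the parameters that full fine-tuning would update.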

Setting Up the Environment

To begin, we set up our environment. The following commands download and install the required libraries:

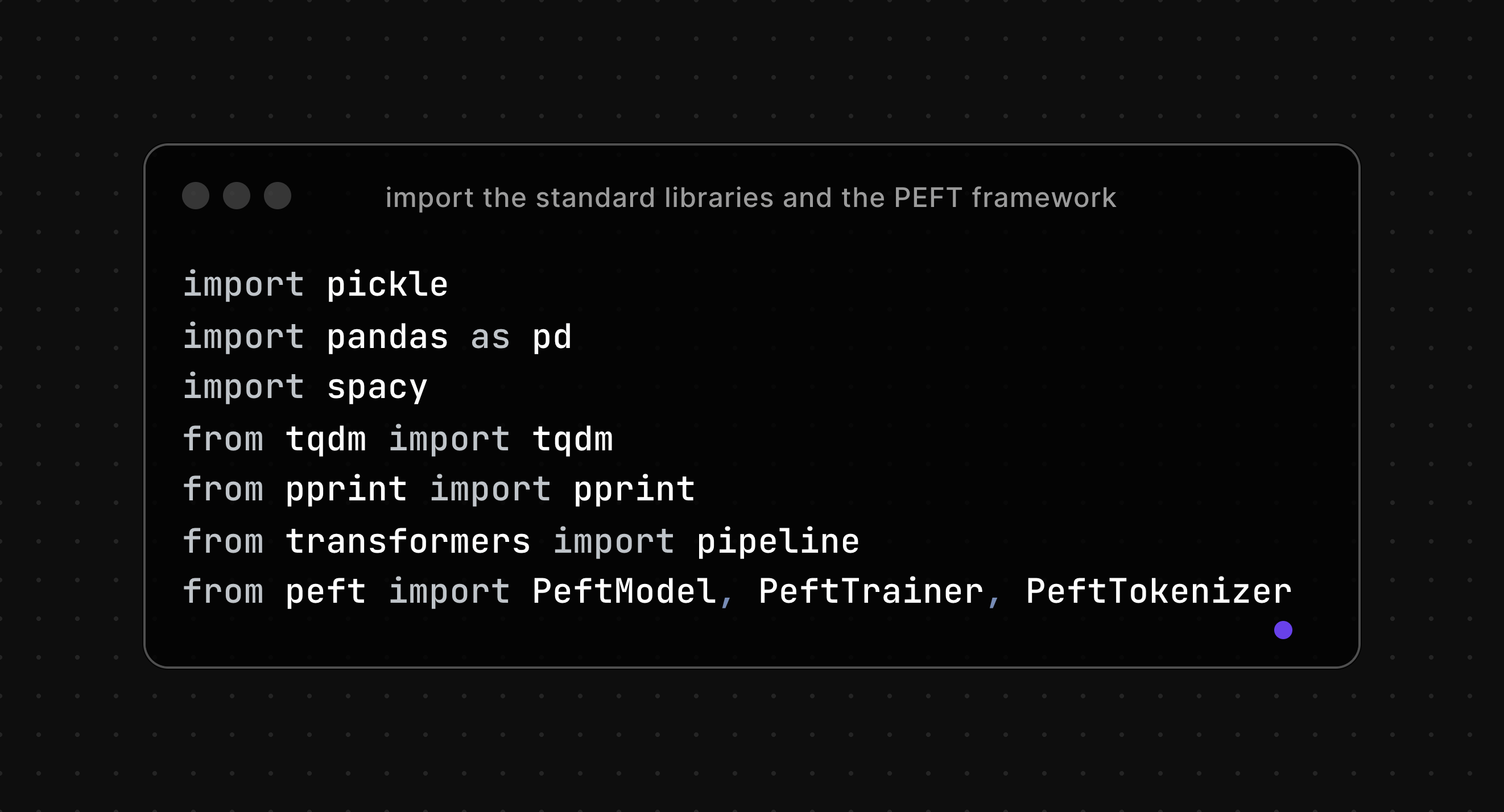
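The exact install commands are only available in the screenshot, so the package list below is an assumption about what a PEFT workflow typically needs. This sketch checks from Python whether those packages are present before going further.

```python
from importlib.util import find_spec

# Assumed package list; the article's actual install commands are not shown.
# A typical install would be: pip install transformers peft datasets torch
REQUIRED = ["transformers", "peft", "datasets", "torch"]


def missing_packages(names):
    """Return the package names that cannot be found in the current environment."""
    return [name for name in names if find_spec(name) is None]


missing = missing_packages(REQUIRED)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages are available.")
```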

Next, we import the standard libraries and the PEFT framework:

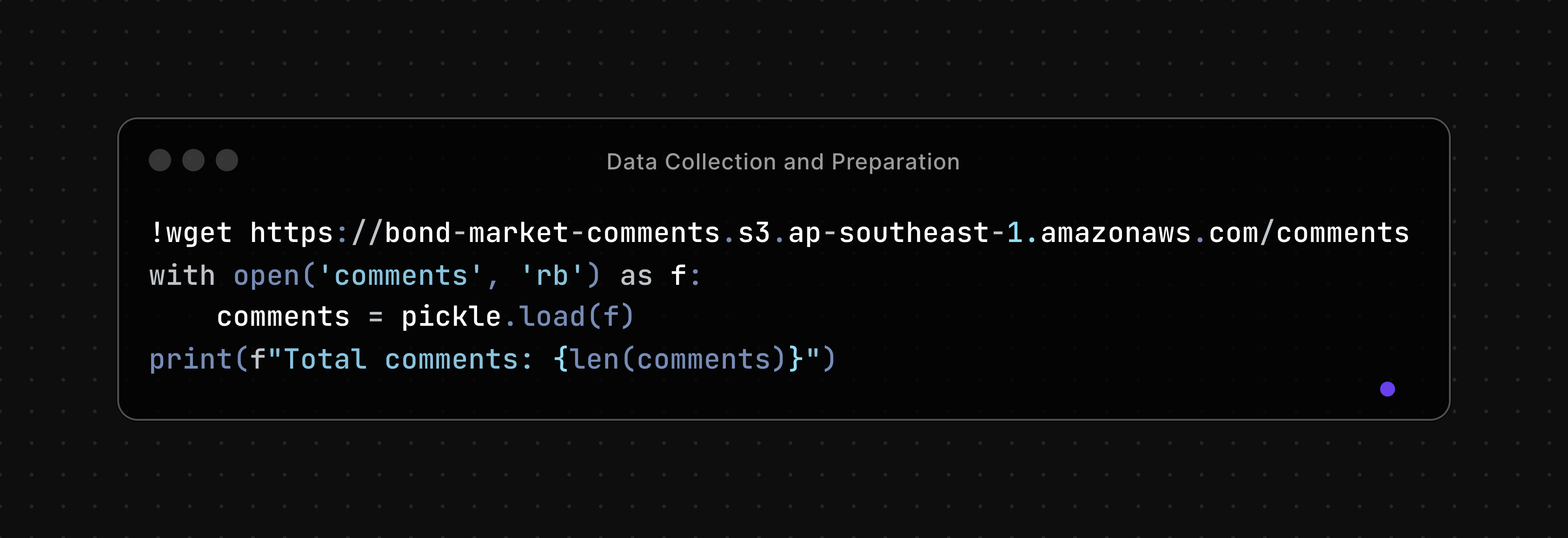

Data Collection and Preparation

For this experiment, we utilize a dataset of market comments focused on bond markets. The dataset comprises comments from various financial analysts and market observers.

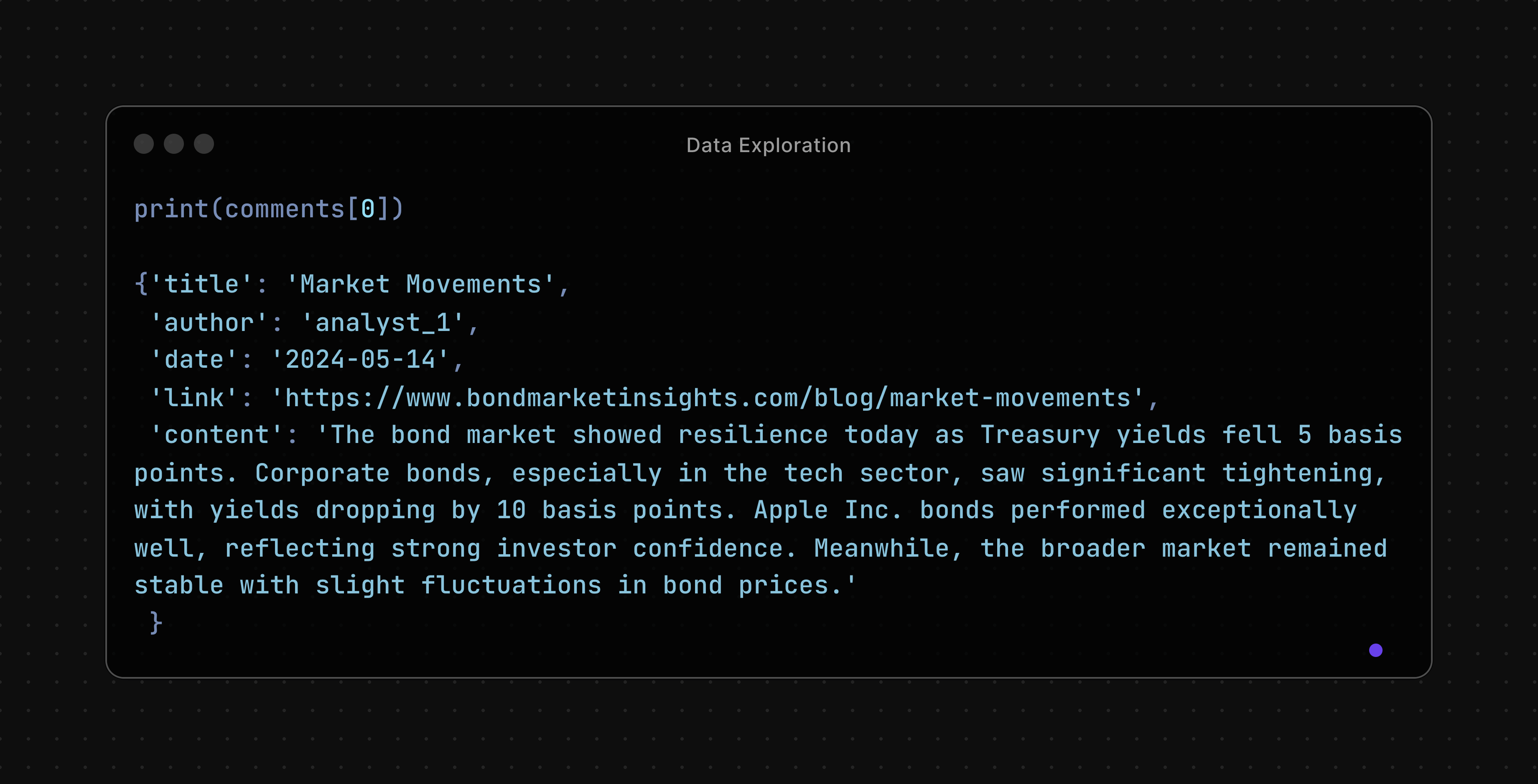

Data Exploration

Let's explore a sample comment to understand the structure of our dataset:

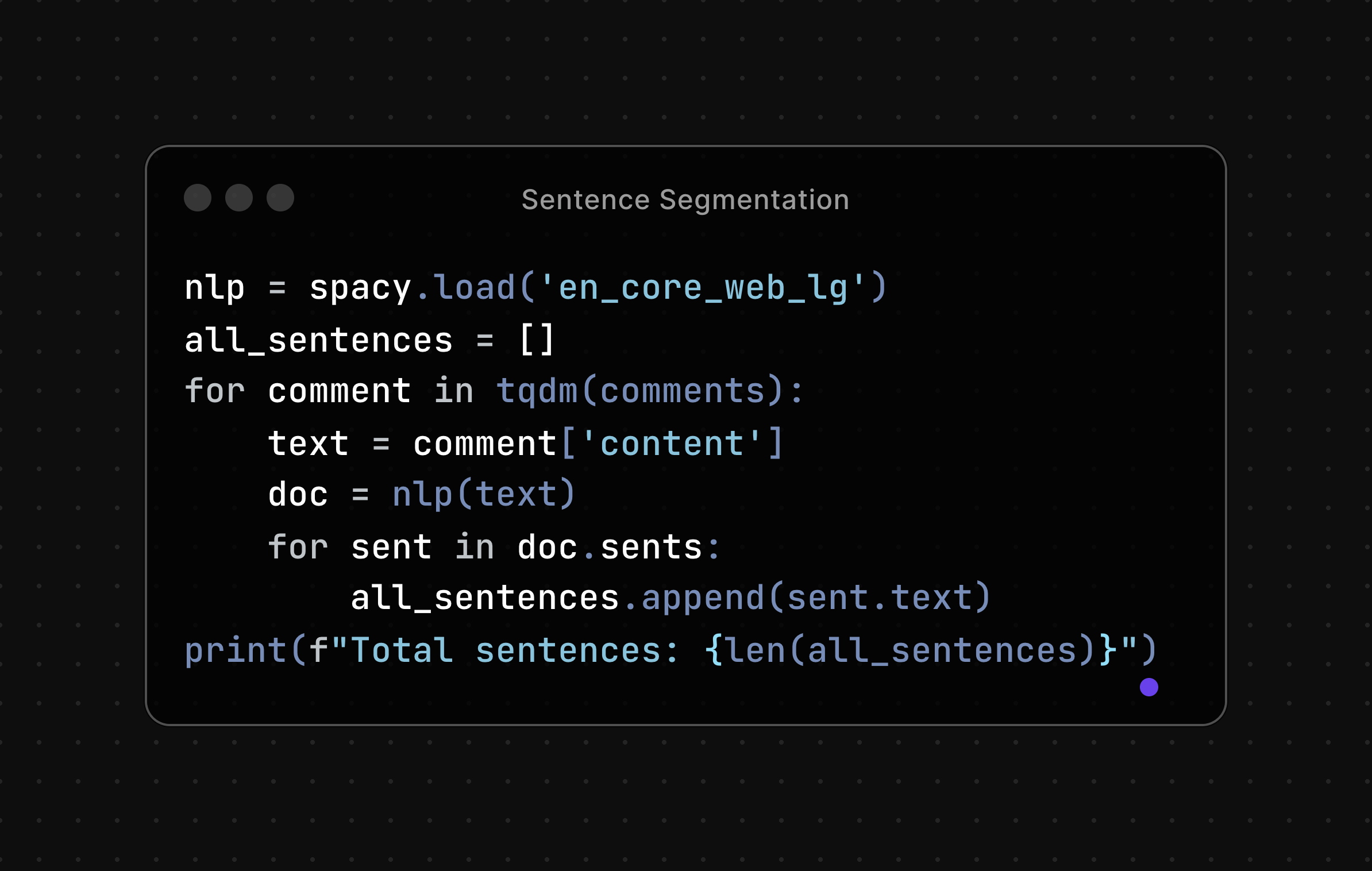
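As a rough sketch of what inspecting a comment might look like, the snippet below stores comments in a small dataclass and prints basic statistics. The `MarketComment` type, the `describe` helper, and the two comments are invented placeholders, not the article's dataset or code.

```python
from dataclasses import dataclass


@dataclass
class MarketComment:
    author: str  # hypothetical field: who wrote the comment
    text: str    # the raw comment text


# Invented placeholder comments, not taken from the article's dataset.
comments = [
    MarketComment("analyst_a", "Treasury yields fell after the weak jobs report. "
                               "Demand for long bonds looks firm."),
    MarketComment("analyst_b", "Credit spreads widened again. "
                               "Investors remain cautious ahead of the central bank meeting."),
]


def describe(comment: MarketComment, preview_chars: int = 60) -> dict:
    """Summarize one comment: length in characters and words, plus a short preview."""
    return {
        "author": comment.author,
        "n_chars": len(comment.text),
        "n_words": len(comment.text.split()),
        "preview": comment.text[:preview_chars],
    }


for comment in comments:
    print(describe(comment))
```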

Sentence Segmentation

We split the comments into individual sentences for granular analysis:

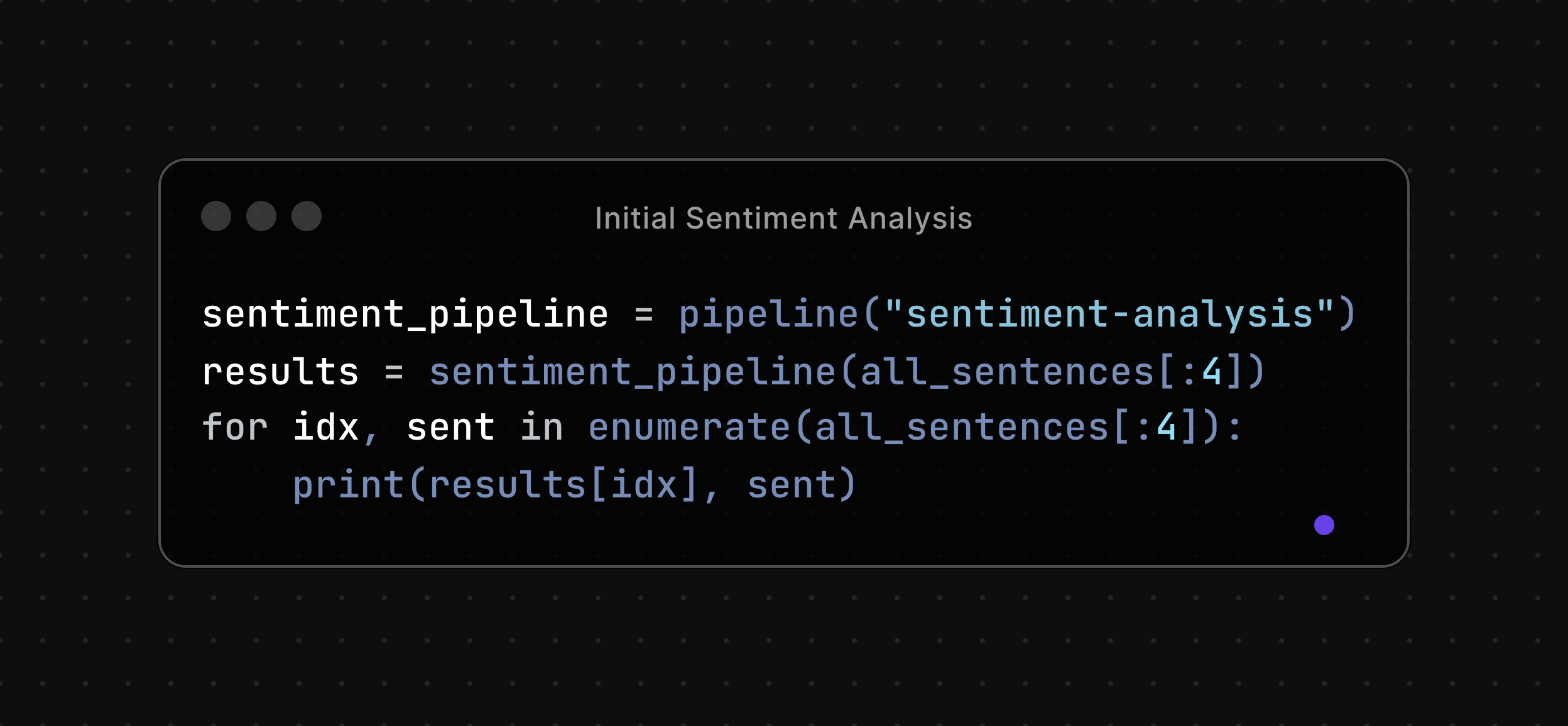

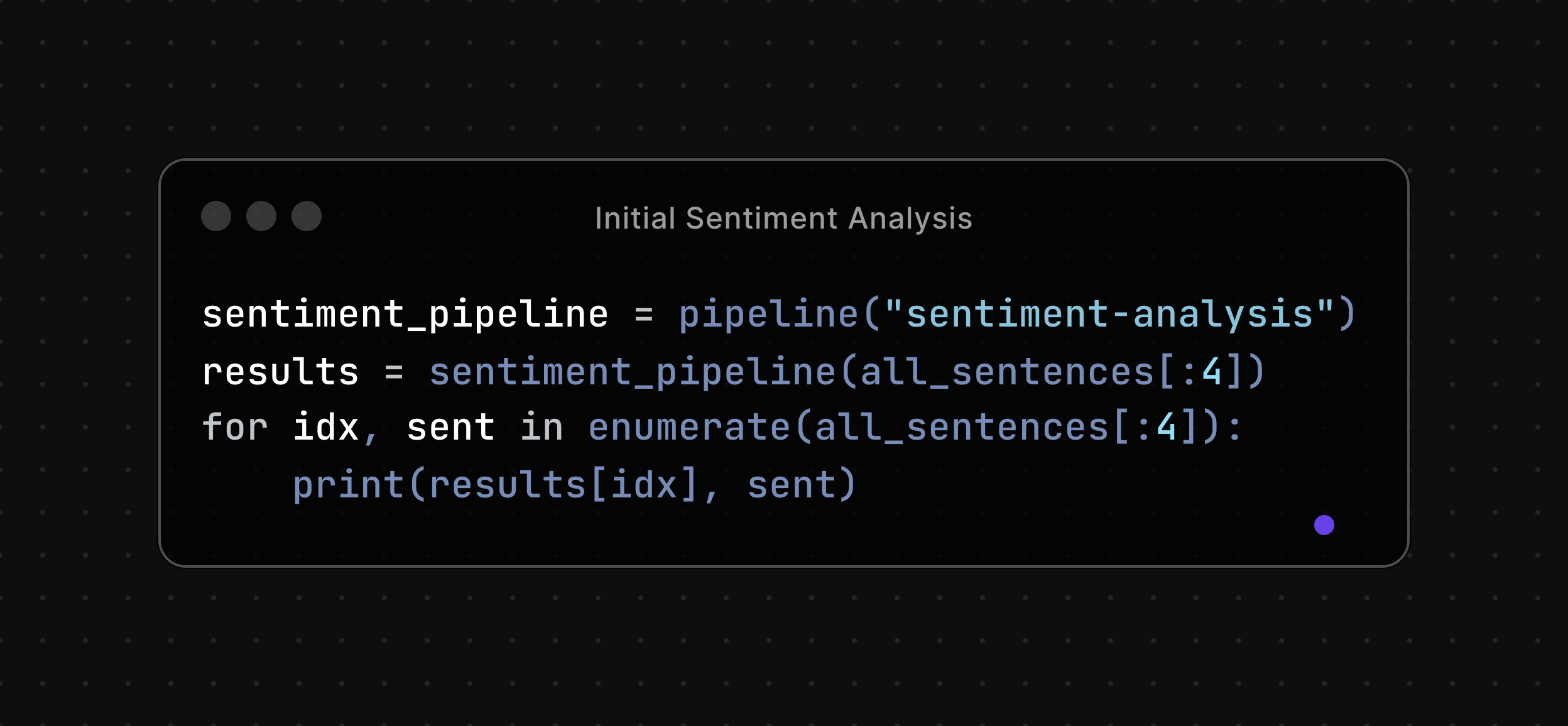
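The segmentation code itself is only in the screenshots, so the following is a minimal regex-based sketch rather than the article's method. It splits after `.`, `!`, or `?` when followed by whitespace and a capital letter; the helper name `split_sentences` is hypothetical.

```python
import re

# Split on whitespace that follows sentence-ending punctuation and precedes a capital letter.
_SENTENCE_BOUNDARY = re.compile(r"(?<=[.!?])\s+(?=[A-Z])")


def split_sentences(comment: str) -> list[str]:
    """Split a comment into sentences using a simple punctuation heuristic."""
    parts = _SENTENCE_BOUNDARY.split(comment.strip())
    return [part.strip() for part in parts if part.strip()]


example = "Yields rose sharply. Demand at the auction was weak! Will the rally hold?"
print(split_sentences(example))
# prints: ['Yields rose sharply.', 'Demand at the auction was weak!', 'Will the rally hold?']
```

This heuristic mis-splits abbreviations such as "U.S. Treasury", so a dedicated sentence tokenizer is likely a better choice for real analyst commentary.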

Initial Sentiment Analysis

Using a pre-trained sentiment analysis pipeline from Hugging Face, we can conduct an initial sentiment assessment before any fine-tuning.
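A baseline like this could be sketched with the Transformers `pipeline` API. The default `"sentiment-analysis"` model is a general-purpose English classifier, not one trained on financial text, and the helper names `classify_sentences` and `label_counts` are hypothetical.

```python
from collections import Counter


def classify_sentences(sentences):
    """Run the default Hugging Face sentiment pipeline over a list of sentences."""
    # Imported lazily so label_counts can be used without transformers installed.
    from transformers import pipeline

    classifier = pipeline("sentiment-analysis")  # downloads a default model on first use
    return classifier(sentences)  # list of {"label": ..., "score": ...} dicts


def label_counts(results):
    """Count how many sentences received each label."""
    return Counter(item["label"] for item in results)


# Usage (requires transformers and a model download):
# results = classify_sentences(["Bond prices rallied.", "The auction was weak."])
# print(label_counts(results))
```

Comparing these label counts before and after fine-tuning gives a quick sense of how much the domain-specific training changes the predictions.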

Fine-Tuning with PEFT

We now move on to fine-tuning our model using PEFT. First, we define a training dataset with labeled sentences:

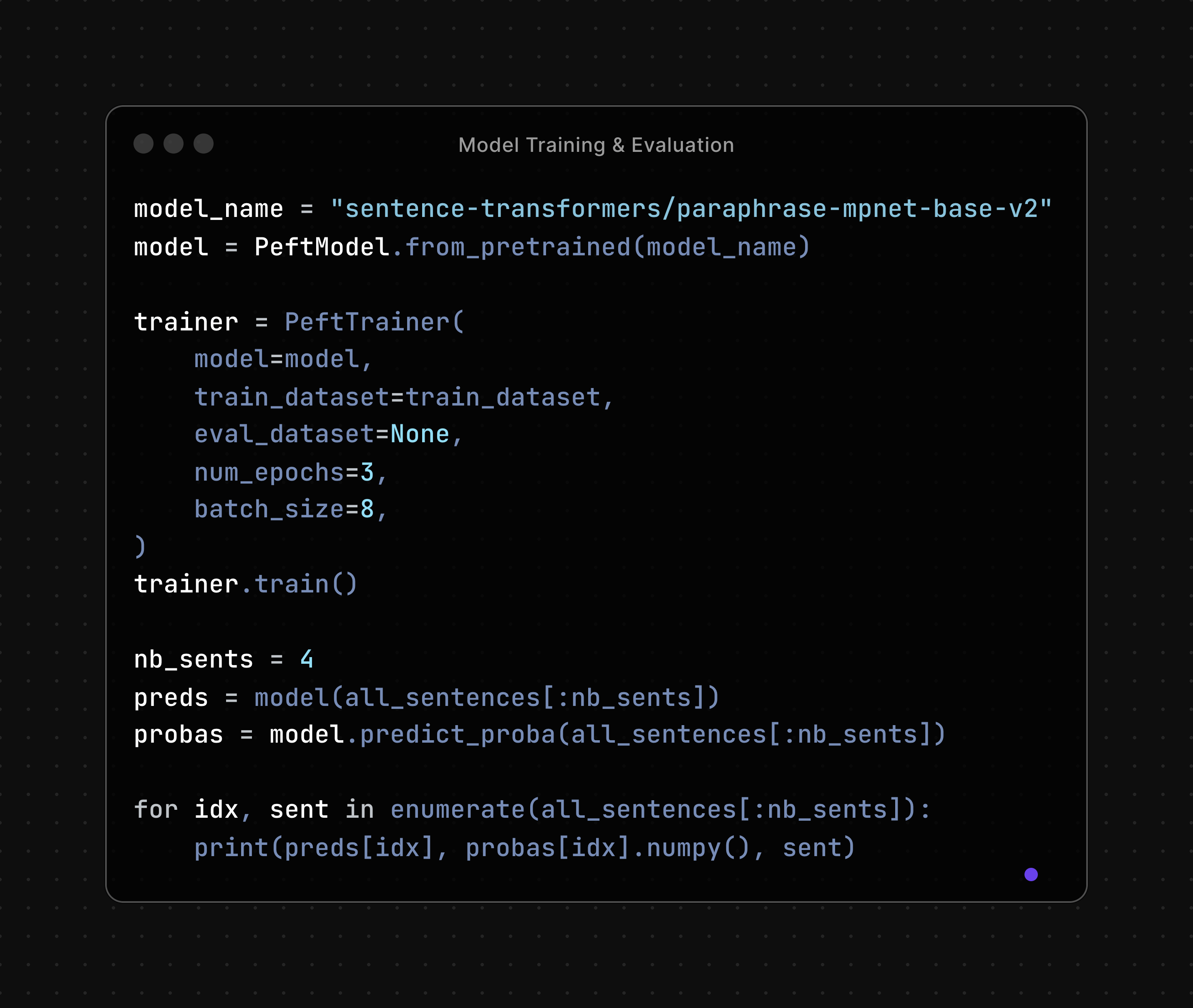
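A labeled training set of this kind might be laid out as follows. The sentences, the three-class label scheme, and the `to_columns` helper are invented for illustration; the article's actual labels are only visible in the screenshot.

```python
# Assumed three-class label scheme.
LABEL2ID = {"negative": 0, "neutral": 1, "positive": 2}

# Invented example sentences, not taken from the article's dataset.
labeled_sentences = [
    ("Bond prices rallied on strong auction demand.", "positive"),
    ("The auction drew the weakest demand in a year.", "negative"),
    ("The 10-year yield was unchanged on the day.", "neutral"),
]


def to_columns(examples, label2id):
    """Convert (text, label) pairs into column-oriented data with integer labels."""
    texts = [text for text, _ in examples]
    labels = [label2id[label] for _, label in examples]
    return {"text": texts, "label": labels}


train_columns = to_columns(labeled_sentences, LABEL2ID)
print(train_columns["label"])  # prints: [2, 0, 1]

# With the datasets library installed, this dict can become a Dataset:
# from datasets import Dataset
# train_dataset = Dataset.from_dict(train_columns)
```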

Model Training and Evaluation

We import a pre-trained Sentence-Transformers model and fine-tune it using PEFT:

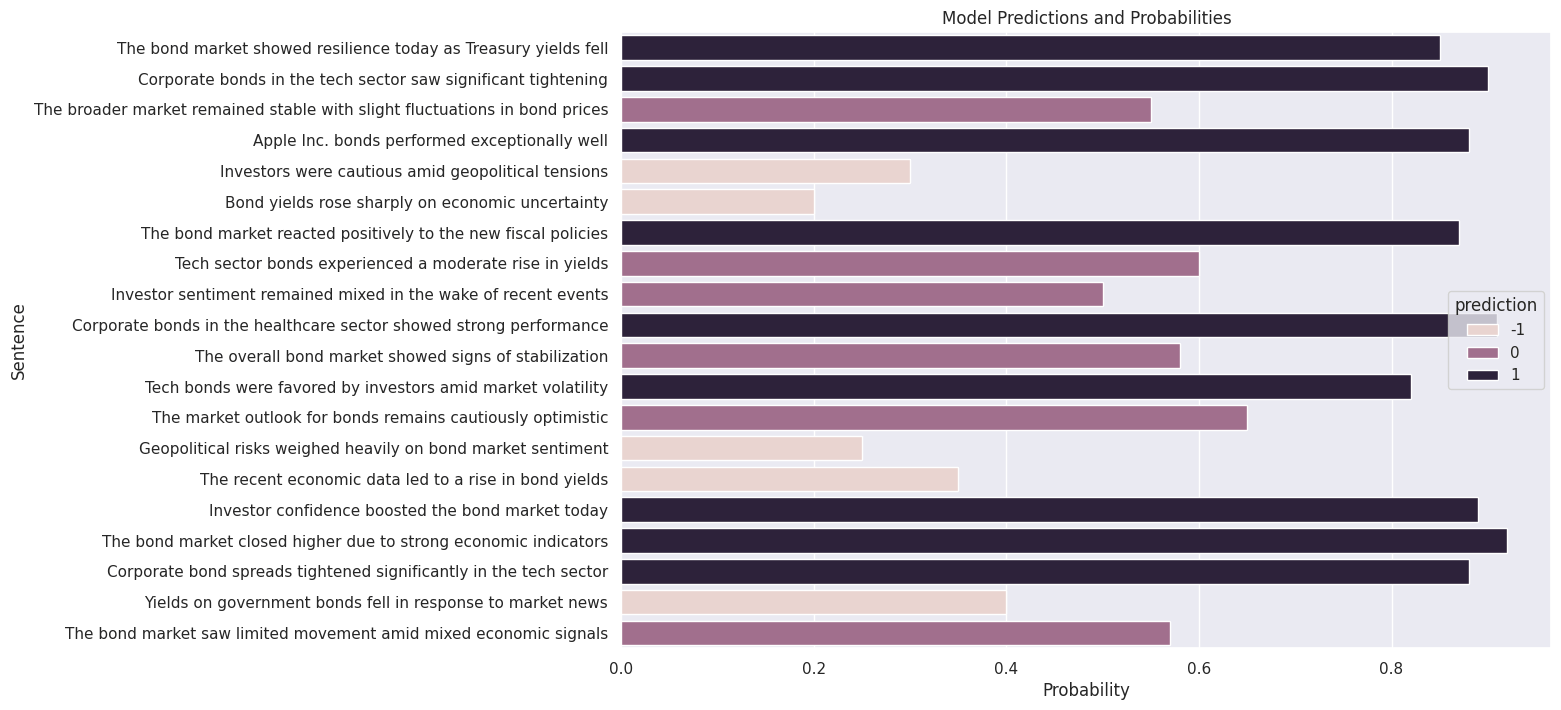
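The training code is not reproduced in the text, so the sketch below is one possible setup rather than the article's. It swaps in a plain Transformers sequence-classification model (`distilbert-base-uncased`) instead of a Sentence-Transformers model, and assumes LoRA with illustrative hyperparameters. The `softmax` helper shows how raw model outputs (logits) become per-class probabilities.

```python
import math

LABELS = ["negative", "neutral", "positive"]  # assumed label set

# Illustrative LoRA hyperparameters; "q_lin" and "v_lin" are DistilBERT's
# query and value projection layers.
LORA_SETTINGS = {
    "r": 8,
    "lora_alpha": 16,
    "lora_dropout": 0.1,
    "target_modules": ["q_lin", "v_lin"],
}


def build_lora_classifier(model_name: str = "distilbert-base-uncased"):
    """Wrap a pre-trained classifier with LoRA adapters (needs transformers and peft)."""
    from peft import LoraConfig, TaskType, get_peft_model
    from transformers import AutoModelForSequenceClassification

    base = AutoModelForSequenceClassification.from_pretrained(
        model_name, num_labels=len(LABELS)
    )
    config = LoraConfig(task_type=TaskType.SEQ_CLS, **LORA_SETTINGS)
    model = get_peft_model(base, config)
    model.print_trainable_parameters()  # reports trainable vs total parameter counts
    return model


def softmax(logits):
    """Convert a list of logits into probabilities that sum to 1."""
    shifted = [x - max(logits) for x in logits]  # subtract max for numerical stability
    exps = [math.exp(x) for x in shifted]
    total = sum(exps)
    return [e / total for e in exps]


# Pair each label with its probability for one hypothetical set of logits.
probs = softmax([2.0, -1.0, 0.5])
print(dict(zip(LABELS, (round(p, 3) for p in probs))))
```

The returned model can then be trained with the usual Transformers `Trainer` or a custom loop; only the adapter weights and the classification head receive gradient updates.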

The visualization below shows the model predictions and their respective probabilities:

Conclusion

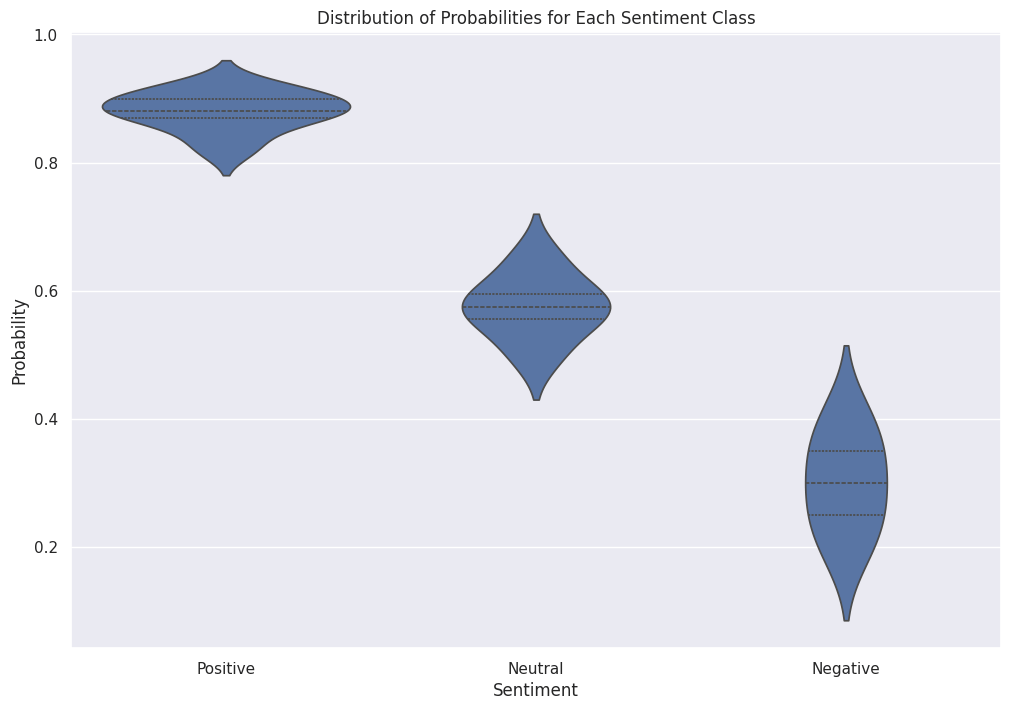

PEFT presents a promising approach for fine-tuning large language models to specific use cases with minimal labeled data. Because only a small fraction of the parameters is trained, it can make sentiment analysis in financial markets cheaper to adapt to a new domain. By focusing on the bond market, we show how the model can be adapted to deliver domain-specific sentiment predictions, which can support more informed investment decisions.

The journey from raw text to actionable insights is now faster and more efficient than ever, thanks to advancements in AI and natural language processing. As financial markets continue to evolve, so too must our tools for understanding them. With PEFT, we are well-equipped to meet these challenges head-on.